Introduction

While many Salesforce products, including Sales Cloud, Service Cloud, Education Cloud, Health Cloud and other Salesforce industry clouds, are built on a common ‘core’ platform that shares the same datastore (an Oracle relational database), Data Cloud uses a very different architecture and technology stack from other Salesforce ‘Clouds’. This post explains the data models and related concepts used by the platform.

Starting with the storage layer, Data Cloud combines multiple services: DynamoDB for hot storage (so data can be served quickly), Amazon S3 for cold storage, and a SQL metadata store for indexing all metadata. As a result, Data Cloud can provide a petabyte-scale data store that avoids the scalability and performance constraints associated with relational databases.

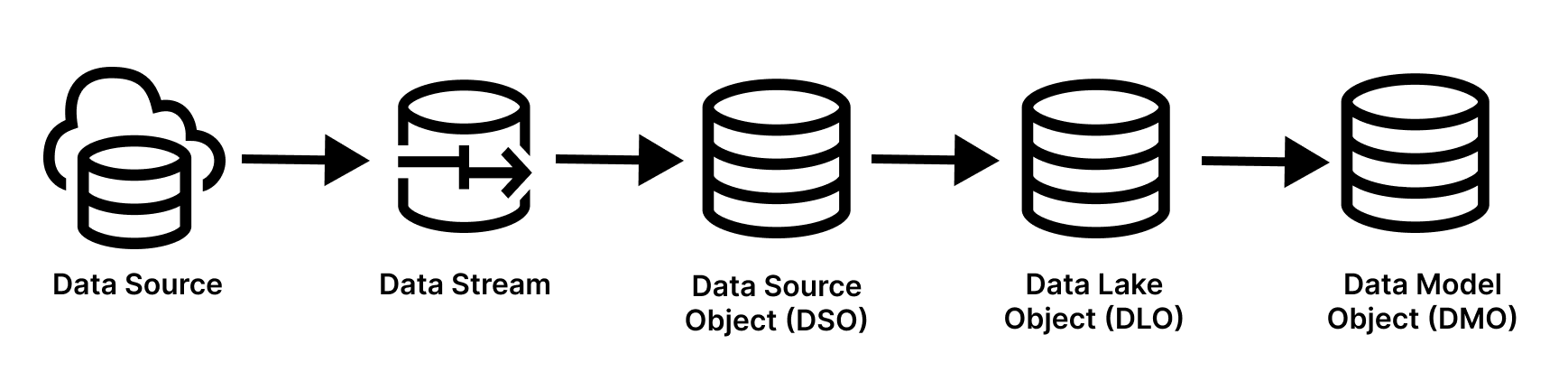
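To make the hot/cold split more concrete, here is a conceptual sketch of a tiered read path: look a key up in a fast "hot" tier first, fall back to a slower "cold" tier on a miss, and promote the record so the next read is fast. The `TieredStore` class and its dict-backed tiers are hypothetical stand-ins for DynamoDB and Amazon S3; this is an illustration of the general idea, not a description of how Data Cloud is implemented internally.

```python
from typing import Any, Dict, Optional


class TieredStore:
    """Hypothetical two-tier key-value store: a small hot tier in front of a large cold tier."""

    def __init__(self) -> None:
        self.hot: Dict[str, Any] = {}   # stand-in for a low-latency store such as DynamoDB
        self.cold: Dict[str, Any] = {}  # stand-in for cheap object storage such as Amazon S3

    def write(self, key: str, value: Any) -> None:
        # New data lands in the cold tier; the hot tier is populated on demand.
        self.cold[key] = value

    def read(self, key: str) -> Optional[Any]:
        # Serve from the hot tier when the record is already there.
        if key in self.hot:
            return self.hot[key]
        # On a miss, fall back to the cold tier and promote the record if found.
        value = self.cold.get(key)
        if value is not None:
            self.hot[key] = value
        return value


store = TieredStore()
store.write("individual:001", {"first_name": "Ada"})
print(store.read("individual:001"))   # read from the cold tier, then promoted
print("individual:001" in store.hot)  # True
```

Promoting on read keeps the hot tier limited to data that is actually requested, which is the usual trade-off behind pairing a fast, expensive store with a cheap, large one.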

The data architecture in Data Cloud is represented as a set of data objects. Understanding these objects is key, as they form the foundation of how data is ingested, harmonized and activated in the platform.

Data Source

A Data Source is the initial data layer used by Data Cloud. It represents a platform or system outside of Data Cloud where your data originates. These sources can be:

- Salesforce platforms including Sales Cloud, Commerce Cloud, Marketing Cloud and Marketing Cloud Personalization

- Object storage platforms including Amazon S3, Microsoft Azure Storage and Google Cloud Storage

- Ingestion APIs and Connector SDKs to programmatically load data from websites, mobile apps and other systems

- SFTP for file-based transfer

Data Stream

A Data Stream is an entity that can be extracted from a Data Source, for example ‘Orders’ from Commerce Cloud, ‘Contacts’ from Sales Cloud, or ‘Subscribers’ from Marketing Cloud. Once a Data Source is connected to Data Cloud, each Data Stream provides a path to its respective entity and must be assigned one of three categories: Profile, Engagement, or Other. Because a Data Source typically exposes several entities, a single Data Source can contain multiple Data Streams.
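The one-to-many relationship between a Data Source and its Data Streams, and the rule that every stream carries exactly one category, can be modeled with a couple of small types. This is a hypothetical sketch: `DataSource`, `DataStream` and `StreamCategory` are illustrative names, not Data Cloud APIs, and the "Customers" stream and the category chosen for each stream are assumptions for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class StreamCategory(Enum):
    PROFILE = "Profile"
    ENGAGEMENT = "Engagement"
    OTHER = "Other"


@dataclass
class DataStream:
    entity: str               # the entity extracted from the source, e.g. "Orders"
    category: StreamCategory  # every stream is assigned exactly one category


@dataclass
class DataSource:
    name: str
    streams: List[DataStream] = field(default_factory=list)

    def add_stream(self, entity: str, category: StreamCategory) -> DataStream:
        # One connected source can expose many streams, one per entity.
        stream = DataStream(entity, category)
        self.streams.append(stream)
        return stream


commerce = DataSource("Commerce Cloud")
commerce.add_stream("Orders", StreamCategory.ENGAGEMENT)
commerce.add_stream("Customers", StreamCategory.PROFILE)  # hypothetical entity
print([(s.entity, s.category.value) for s in commerce.streams])
```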

Data Source Object

A Data Stream is ingested into a Data Source Object, or ‘DSO’. This object is a physical, temporary staging data store that holds the data in the raw, native file format of the Data Stream (for example, a CSV file). Formulas can be applied to perform minor transformations on fields at the time of ingestion.
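As a rough illustration of staging raw data with light, ingestion-time formulas, the sketch below parses a CSV payload, keeps every value as a raw string, and applies a couple of per-row formulas. Data Cloud formulas are written in its own formula expression language, not Python; the `FORMULAS` mapping, the `ingest_to_dso` function and the field names are hypothetical.

```python
import csv
import io
from typing import Callable, Dict, List

Row = Dict[str, str]

# Hypothetical ingestion-time formulas: each one derives or tidies a single field from the raw row.
FORMULAS: Dict[str, Callable[[Row], str]] = {
    "email": lambda r: r["email"].strip().lower(),
    "full_name": lambda r: f"{r['first_name']} {r['last_name']}",
}


def ingest_to_dso(raw_csv: str) -> List[Row]:
    """Parse a raw CSV payload and apply minor per-row formulas."""
    rows: List[Row] = []
    for raw in csv.DictReader(io.StringIO(raw_csv)):
        row = dict(raw)  # values stay as raw strings, as in the source file
        for target, formula in FORMULAS.items():
            row[target] = formula(raw)
        rows.append(row)
    return rows


payload = "first_name,last_name,email\nAda,Lovelace, ADA@Example.com \n"
staged = ingest_to_dso(payload)
print(staged[0]["full_name"], staged[0]["email"])
```

Note that no typing happens here: the staged rows are still strings, which matches the idea of the DSO as a raw staging area.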

Data Lake Object

The next data object in the data flow is the Data Lake Object, or ‘DLO’. The DLO is the first object that is available for inspection, and it enables users to prepare their data by mapping fields and applying additional transformations. Like the DSO, the DLO is physically stored; it is the product of a DSO plus any transformations applied to it.
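The preparation step can be pictured as a field-mapping pass over the staged rows followed by further transformations. In this hypothetical sketch, `FIELD_MAP` renames staged field names to prepared names and a single transformation casts the order total from text to a number; the names and the transformation are assumptions, not Data Cloud configuration.

```python
from typing import Any, Dict, List

# Hypothetical mapping from staged (DSO) field names to prepared (DLO) field names.
FIELD_MAP = {"cust_id": "customer_id", "eml": "email", "ord_total": "order_total"}


def prepare_dlo(dso_rows: List[Dict[str, str]]) -> List[Dict[str, Any]]:
    """Rename fields per FIELD_MAP, then apply an additional transformation."""
    prepared: List[Dict[str, Any]] = []
    for row in dso_rows:
        # Fields without a mapping keep their original name.
        record: Dict[str, Any] = {FIELD_MAP.get(k, k): v for k, v in row.items()}
        # Example transformation: cast the order total from text to a number.
        record["order_total"] = float(record["order_total"])
        prepared.append(record)
    return prepared


dlo = prepare_dlo([{"cust_id": "C-1", "eml": "ada@example.com", "ord_total": "42.50"}])
print(dlo)
```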

DLOs are typed, schema-based, materialized views that reside in storage containers in the data lake (Amazon S3), generally as Apache Parquet files. Parquet is an open-source, column-oriented file format designed for efficient data storage and retrieval. On top of this, Apache Iceberg provides an abstraction layer between the physical data files and their table representation.
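Two ideas from this paragraph, a typed schema and column-oriented layout, can be shown with the standard library alone. The sketch below pivots row records into one list per column while enforcing a hypothetical `SCHEMA`; it is not Parquet itself. Real Parquet files add encoding, compression and per-column statistics, and would normally be written with a dedicated library.

```python
from typing import Any, Dict, List

# Hypothetical DLO schema: column name -> Python type used to validate values.
SCHEMA: Dict[str, type] = {"customer_id": str, "order_total": float}


def to_columnar(rows: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Pivot row records into one list per column, enforcing the schema's types."""
    columns: Dict[str, List[Any]] = {name: [] for name in SCHEMA}
    for row in rows:
        for name, expected in SCHEMA.items():
            value = row[name]
            if not isinstance(value, expected):
                raise TypeError(f"{name}: expected {expected.__name__}, got {type(value).__name__}")
            columns[name].append(value)
    return columns


rows = [
    {"customer_id": "C-1", "order_total": 42.5},
    {"customer_id": "C-2", "order_total": 10.0},
]
cols = to_columnar(rows)
# A query that only needs order totals reads a single contiguous column.
print(sum(cols["order_total"]))
```

The payoff of the columnar layout is visible in the last line: an aggregate over one field touches only that field's values rather than every full record.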

The adoption of these industry-standard formats is worth noting. Because these file formats are widely supported across cloud computing providers, external platforms such as Snowflake can integrate with Data Cloud using a zero-copy architecture.

Data Model Object

Unlike DSOs and DLOs, which use a physical data store, a Data Model Object, or ‘DMO’, provides a virtual, non-materialized view into the data lake. The result of running a query against the view is not stored anywhere and is always based on the current data snapshot in the DLOs. Attributes within a DMO can be created from different Data Streams, Calculated Insights and other sources.
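The difference between a materialized store and a virtual view can be sketched in a few lines: the view below holds only a field mapping and recomputes its result from the underlying store on every query. `DataLakeObject`, `DataModelObject` and the attribute names are hypothetical illustrations, not Data Cloud classes.

```python
from typing import Dict, List


class DataLakeObject:
    """Hypothetical materialized store: rows are physically kept in memory."""

    def __init__(self) -> None:
        self.rows: List[Dict[str, str]] = []


class DataModelObject:
    """Hypothetical virtual view: holds a field mapping, never a copy of the data."""

    def __init__(self, source: DataLakeObject, mapping: Dict[str, str]) -> None:
        self.source = source
        self.mapping = mapping  # DMO attribute -> DLO field

    def query(self) -> List[Dict[str, str]]:
        # Computed on every call from whatever the DLO holds right now; nothing is cached.
        return [{attr: row[fld] for attr, fld in self.mapping.items()} for row in self.source.rows]


contacts_dlo = DataLakeObject()
individual = DataModelObject(contacts_dlo, {"FirstName": "first_name"})
contacts_dlo.rows.append({"first_name": "Ada"})
print(individual.query())  # reflects a row added after the view was defined
```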

Unlike Data Streams, DMOs don’t have a first-class concept of category. Instead, the category is inherited from the first DLO mapped to a DMO. After the DMO inherits a category, only DLOs with that same category can map to it.
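The inheritance rule is easy to express as a small state machine: the category starts unset, the first mapping fixes it, and later mappings with a different category are rejected. This is a hypothetical sketch; `CategorizedDMO`, `CategoryMismatchError` and the DLO names are illustrative, not platform APIs.

```python
from typing import List, Optional


class CategoryMismatchError(ValueError):
    pass


class CategorizedDMO:
    """Hypothetical DMO that inherits its category from the first mapped DLO."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.category: Optional[str] = None  # unset until the first DLO is mapped
        self.mapped_dlos: List[str] = []

    def map_dlo(self, dlo_name: str, dlo_category: str) -> None:
        if self.category is None:
            # The first mapping fixes the DMO's category.
            self.category = dlo_category
        elif dlo_category != self.category:
            raise CategoryMismatchError(
                f"{dlo_name} is {dlo_category}; {self.name} only accepts {self.category}"
            )
        self.mapped_dlos.append(dlo_name)


dmo = CategorizedDMO("Individual")
dmo.map_dlo("crm_contacts", "Profile")
dmo.map_dlo("commerce_customers", "Profile")
try:
    dmo.map_dlo("email_clicks", "Engagement")
except CategoryMismatchError as err:
    print(err)
```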

Like Salesforce objects, DMOs provide a canonical data model with predefined attributes, presented as standard objects; custom DMOs (referred to as custom objects) can also be created. DMOs can also have a standard or custom relationship to other DMOs, structured as either one-to-one or many-to-one. There are currently 89 standard DMOs in Data Cloud, and the number continues to grow to support additional entity use cases.
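A relationship between DMOs can be thought of as a key on one object pointing at a key on another, plus a cardinality. The sketch below resolves a many-to-one relationship by indexing the "one" side, and rejects duplicate keys on the "from" side when the relationship is declared one-to-one. `Relationship`, `Cardinality`, `resolve` and the sample Sales Order/Individual fields are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Dict, List, Set


class Cardinality(Enum):
    ONE_TO_ONE = "1:1"
    MANY_TO_ONE = "N:1"


@dataclass(frozen=True)
class Relationship:
    from_dmo: str
    from_field: str
    to_dmo: str
    to_field: str
    cardinality: Cardinality


def resolve(rel: Relationship, from_rows: List[Dict[str, Any]], to_rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Attach to each 'from' row the single 'to' row its key points at (None if absent)."""
    index = {row[rel.to_field]: row for row in to_rows}  # the 'one' side is unique per key
    seen: Set[Any] = set()
    joined: List[Dict[str, Any]] = []
    for row in from_rows:
        key = row[rel.from_field]
        if rel.cardinality is Cardinality.ONE_TO_ONE:
            # In a 1:1 relationship each key may appear at most once on the 'from' side.
            if key in seen:
                raise ValueError(f"1:1 relationship violated for key {key!r}")
            seen.add(key)
        joined.append({**row, rel.to_dmo: index.get(key)})
    return joined


orders_to_individual = Relationship("SalesOrder", "customer_id", "Individual", "id", Cardinality.MANY_TO_ONE)
orders = [{"order_id": "O-1", "customer_id": "I-1"}, {"order_id": "O-2", "customer_id": "I-1"}]
individuals = [{"id": "I-1", "name": "Ada"}]
for r in resolve(orders_to_individual, orders, individuals):
    print(r["order_id"], r["Individual"]["name"])
```

Both orders resolve to the same Individual, which is exactly what many-to-one permits and one-to-one forbids.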

DMOs are organized into different Data Object subject areas, including:

- Case for service and support cases

- Engagement for engagement with an Individual, like email engagement activity (send, open, click)

- Loyalty for managing reward and recognition programs

- Party for representing attributes related to an individual, like contact or account information

- Privacy to track consent and data privacy preferences for an Individual

- Product to define attributes related to products and services (goods)

- Sales Order for defining past and forecast sales by product

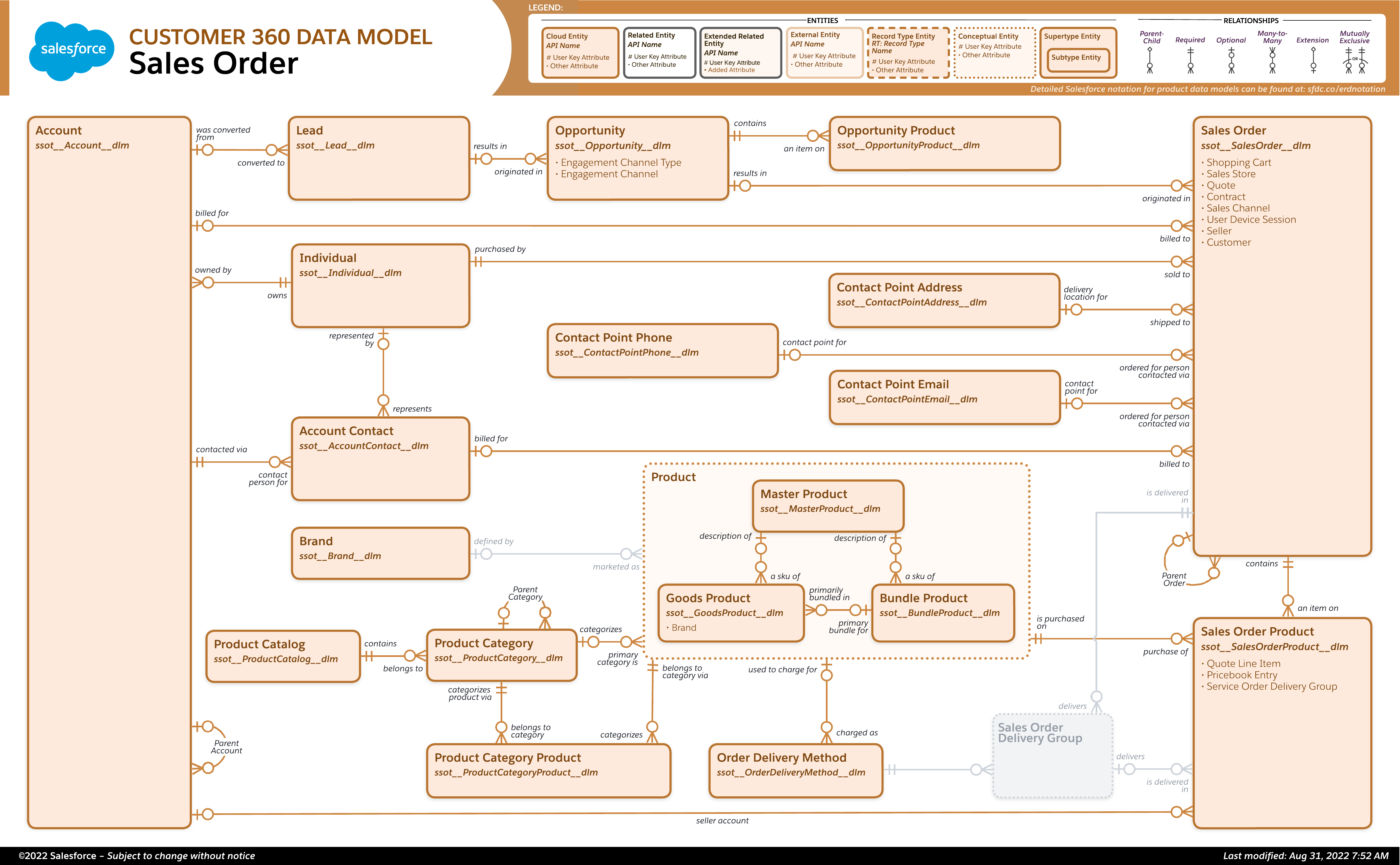

For example, the diagram below shows the DMOs used in the Sales Order subject area:

- Sales Order for information around current and pending sales orders

- Sales Order Product for attributes related to a specific product or service

- Sales Store representing a retailer

- Order Delivery Method to define different order and delivery methods for fulfillment

- Opportunity to represent a sale that is in progress

- Opportunity Product to connect an Opportunity to the Product (or Products) that it represents.

Sales Order Subject Area in Data Cloud (source: architect.salesforce.com)

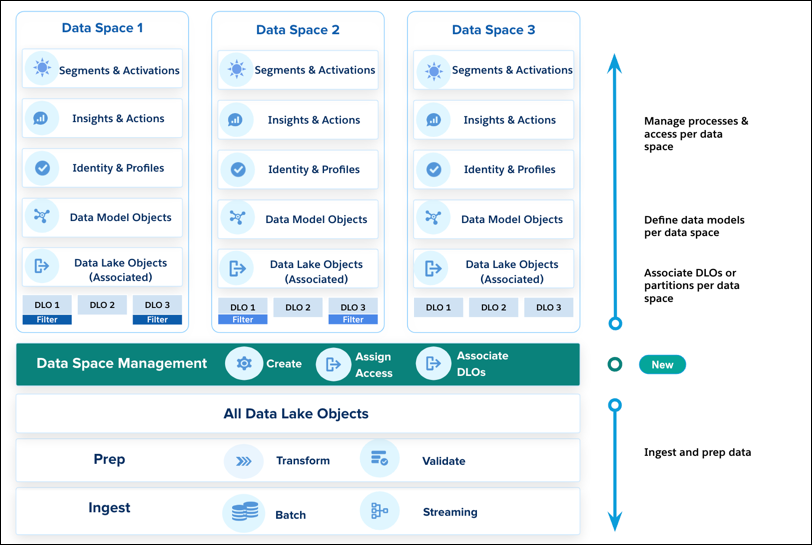

Data Spaces

Data Spaces provide logical partitions for separating data between different brands, regions or departments and for limiting which data users can access, without needing multiple Data Cloud instances. Data Spaces can also be used to align with a Software Development Lifecycle (SDLC), letting you stage and test Data Objects in a separate environment without impacting production data. As illustrated below, Data Sources, Data Streams and DLOs can be made accessible across Data Spaces, while DMOs and other platform features are isolated within a Data Space, with user access governed by permission sets.

Data Spaces in Data Cloud (source: help.salesforce.com)
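The sharing model described above can be sketched as follows: shared objects (sources, streams, DLOs) may appear in several Data Spaces, each DMO belongs to exactly one space, and a user sees a space's DMOs only if one of their permission sets grants that space. This is a simplified, hypothetical model; `DataSpace`, `User`, `PERMISSION_SET_TO_SPACE`, `visible_dmos` and all names in the example are assumptions, not Data Cloud APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class DataSpace:
    name: str
    shared_objects: Set[str] = field(default_factory=set)  # sources, streams, DLOs made available here
    dmos: Set[str] = field(default_factory=set)            # DMOs scoped to this space only


@dataclass
class User:
    name: str
    permission_sets: Set[str]


# Hypothetical mapping from permission set to the data space it unlocks.
PERMISSION_SET_TO_SPACE: Dict[str, str] = {"DS_Brand_A": "brand_a", "DS_Brand_B": "brand_b"}


def visible_dmos(user: User, spaces: Dict[str, DataSpace]) -> Set[str]:
    """Return the DMOs a user can see across the data spaces their permission sets grant."""
    visible: Set[str] = set()
    for ps in user.permission_sets:
        space_name = PERMISSION_SET_TO_SPACE.get(ps)
        if space_name in spaces:
            visible |= spaces[space_name].dmos
    return visible


shared_dlo = "web_engagement_dlo"
spaces = {
    "brand_a": DataSpace("brand_a", {shared_dlo}, {"BrandA_Individual"}),
    "brand_b": DataSpace("brand_b", {shared_dlo}, {"BrandB_Individual"}),
}
analyst = User("analyst", {"DS_Brand_A"})
print(visible_dmos(analyst, spaces))
```

The same DLO feeds both spaces, but the analyst only sees the Brand A DMO, which mirrors the split between shared ingestion objects and isolated DMOs.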

Conclusion

Due in part to technological advancements and the drop in storage costs, companies now have gargantuan datasets at their disposal. Every time a customer makes a purchase, opens an email, or even simply views a web page, these engagement events can be captured and stored. If organized properly, this data enables you to understand your customers, predict their needs, personalize interactions, and much more.

But like other SaaS vendors, Salesforce faces a core challenge that stems from the success of its original platform: it was created more than 20 years ago, was designed for a different era, and runs on a competitor’s database (Oracle). One of the main challenges Salesforce has faced with its database and platform architecture is that it doesn’t handle voluminous or “big” data well. Data Cloud addresses these limitations of the core architecture and of relational databases through a purpose-built architecture. Data Cloud is set to form the backbone of the Salesforce platform, supporting the data needs of its customers and product line through the next two decades.

If you would like to learn more about how to implement and integrate Salesforce Data Cloud in your organization following best practices, reach out today.